Making Intelligent Systems Safe, with Mathematical Certainty

Safety depends on the system an artificial intelligence is embedded in.

Ensuring safety in complex AI systems requires indentifying where risks originate, from the software governing its operations to the physical environment it controls. Safety emerges (or fails) from the interaction between many components — not from an AI in isolation — and requires managing risks at different levels of abstraction.

- Modelling risks arise from erroneous or overly stringent assumptions about the digital or physical world in which a system operates.

- Alignment risks arise from the discrepancy between a system’s behaviour and the behaviour intended by the designer, user, or regulator.

Protecting people from flawed systems, and systems from malicious people.

We take a holistic approach that ensures that systems are built to prevent harm to individuals (safety) and defends systems against deliberate attacks and misuse (security). We recognise that safety and security are distinct but inseparable: by integrating both, we create resilient systems that serve and safeguard everyone.

- Safety prevents system flaws or bugs from potentially harming people or the wider environment.

- Security prevents malicious actors from attempting to control or break the system or steal data.

Empirical evidence isn’t enough — safety demands formal proof.

Traditional evaluation practices demonstrate that systems work in known cases, but they don’t rigorously reason about every possible scenario from first principles. That’s why we develop formal verification technology to prove systems behave as intended, providing mathematical guarantees — absolute or probabilistic — and confidence much beyond what testing alone can offer.

- Where total assurance is not negotiable, we guarantee with absolute certainty that systems behave according to their intended behaviour.

- Where failure to comply must be tolerated within acceptable margins, we guarantee probabilistically that systems work as expected most of the time.

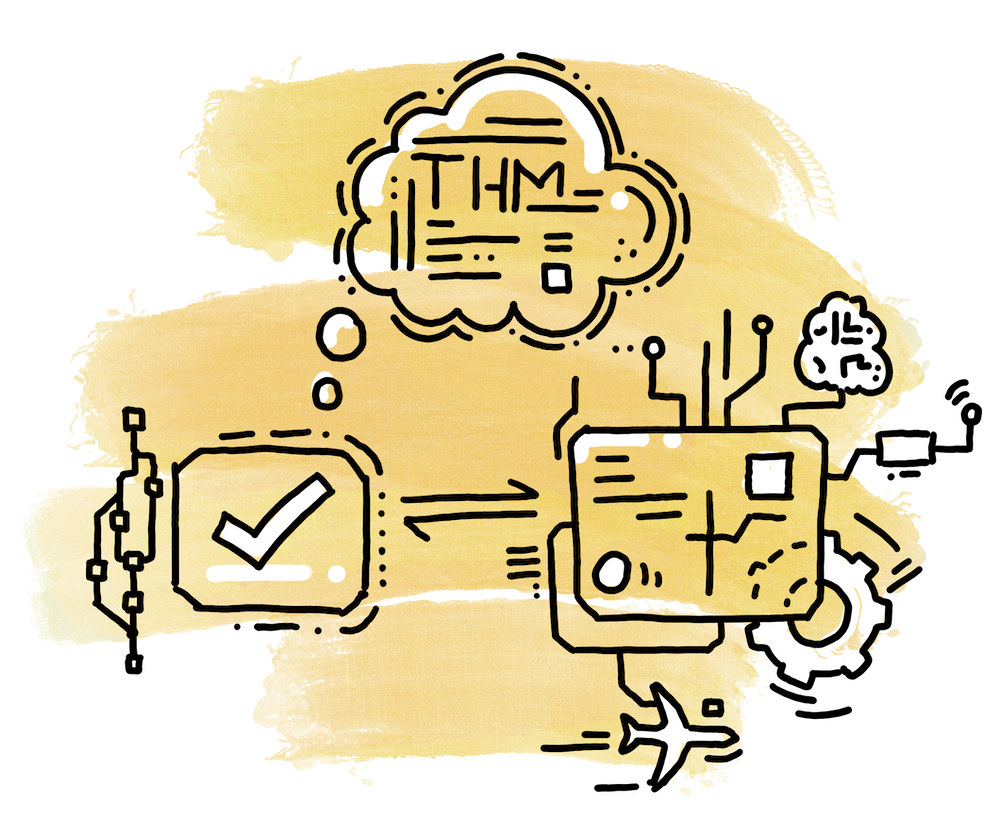

Our Thesis

Combining Machine Learning and Automated Reasoning

We harness two complementary (but unfortunately often siloed) AI paradigms: machine learning — rooted in data and experience, extremely powerful but today not auditable — and automated reasoning — rooted in rules and logic, mathematically trustworthy but traditionally not as scalable.

Our approach makes learning and reasoning work at the service of each other, combining the best of both worlds. We build upon three technical pillars, which correspond to the phases of a new workflow for AI safety with formal guarantees.

Mathematical Modelling

We enable engineers to create auditable models of the deployment environments and formal specifications of safety and intended behaviour for the system — models and specifications flexible enough to vary in accuracy and remain useful (and rigorous) even when imprecise or underspecified.

Formal Certification

We develop the technology to enable machine learning algorithms to generate not only decision-making policies but also machine-checkable proof certificates formally witnessing their compliance with the modelled safety requirements

Continual Monitoring

We provide the infrastructure for monitoring and controlling AI systems after deployment, to assure their ongoing compliance with safety specifications and validate modelling assumptions.

Academic Experts

We have world leading scientists in the fields of AI, formal verification, and computer security, with publications in top-level peer reviewed journals.

Industry Experience

Our team brings extensive industry experience in AI, software engineering, and cyber security.

Publicly funded

We are proud to have been awarded the Safeguarded AI TA1.2/1.3 grant to advance safe and trustworthy AI.

Building Technology for Everyone

We are a non-profit organisation dedicated to formal methods and artificial intelligence research, committed to building open infrastructure for the safety assurance of algorithmic systems, for the benefit of all.

Contact us!

Funders and Partners